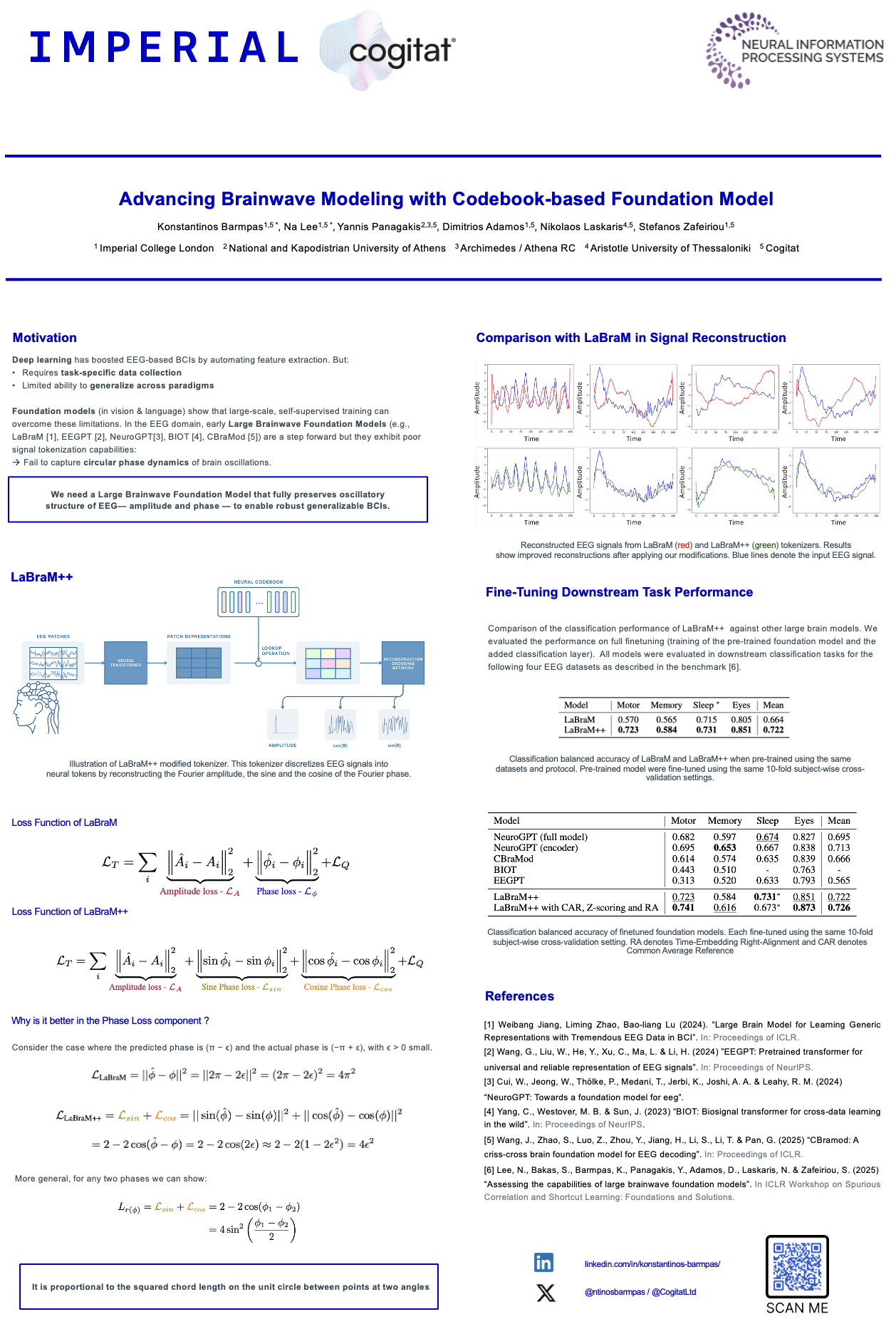

Advancing Brainwave Modeling with a Codebook-Based Foundation Model

Published in the NeurIPS 2025 Foundation Models for the Brain and Body Workshop

Authors: Konstantinos Barmpas*, Na Lee*, Yannis Panagakis, Dimitrios Adamos, Nikolaos Laskaris and Stefanos Zafeiriou


Recent advances in large-scale pre-trained Electroencephalography (EEG) models have shown great promise, driving progress in Brain-Computer Interfaces (BCIs) and healthcare applications. However, despite their success, many existing pre-trained models struggle to fully capture the rich information content of neural oscillations. This limitation fundamentally constrains their performance and generalizability across diverse BCI tasks, and it is frequently rooted in suboptimal architectural design choices that restrict their representational capacity. In this work, we introduce LaBraM++, an enhanced Large Brainwave Foundation Model (LBM) that incorporates principled improvements grounded in robust signal processing foundations. LaBraM++ demonstrates substantial gains across a variety of tasks, consistently outperforming the original LaBraM architecture on which it is based and achieving competitive results against other open-source LBMs. Its superior performance and training efficiency highlight its potential as a strong foundation for future advancements in LBMs.

NeurIPS 2025 Foundation Models for the Brain and Body Workshop Poster